AI Taylor Swift is mad. She is calling up Kim Kardashian to complain about her “lame excuse of a husband,” Kanye West. (Kardashian and West are, in reality, divorced.) She is threatening to skip Europe on her Eras Tour if her fans don’t stop asking her about international dates. She is insulting people who can’t afford tickets to her concerts and using an unusual amount of profanity. She’s being kind of rude.

But she can also be very sweet. She gives a vanilla pep talk: “If you are having a bad day, just know that you are loved. Don’t give up!” And she just loves the outfit you’re wearing to her concert.

She is also a fan creation. Based on tutorials posted to TikTok, many Swifties are using a program to create hyper-realistic sound bites in Swift’s voice and then circulating them on social media. The tool, the beta of which was launched in late January by ElevenLabs, offers “Instant Voice Cloning.” In effect, it lets you upload an audio sample of a person’s voice and make it say whatever you want. It’s not perfect, but it’s pretty good. The audio has some tonal hitches here and there, but it tends to sound fairly natural, close enough to fool you if you aren’t paying enough attention. Dark corners of the internet immediately used it to make celebrities say abusive or racist things; ElevenLabs said in response that it “can trace back any generated audio to the user” and would consider adding more guardrails, such as manually verifying every submission.

Whether it has done so is unclear. When I forked over $1 to try the technology myself (a discounted rate for the first month), my upload was approved almost instantly. The slowest part of the process was finding a clear one-minute audio clip of Swift to use as a source for my custom AI voice. Once that was approved, I was able to use it to create fake audio right away. The entire process took less than five minutes. ElevenLabs declined to comment on its policies or on the ability to use its technology to fake Taylor Swift’s voice, but it provided a link to its guidelines on voice cloning. The company told The New York Times earlier this month that it wants to create a “universal detection system” in collaboration with other AI developers.

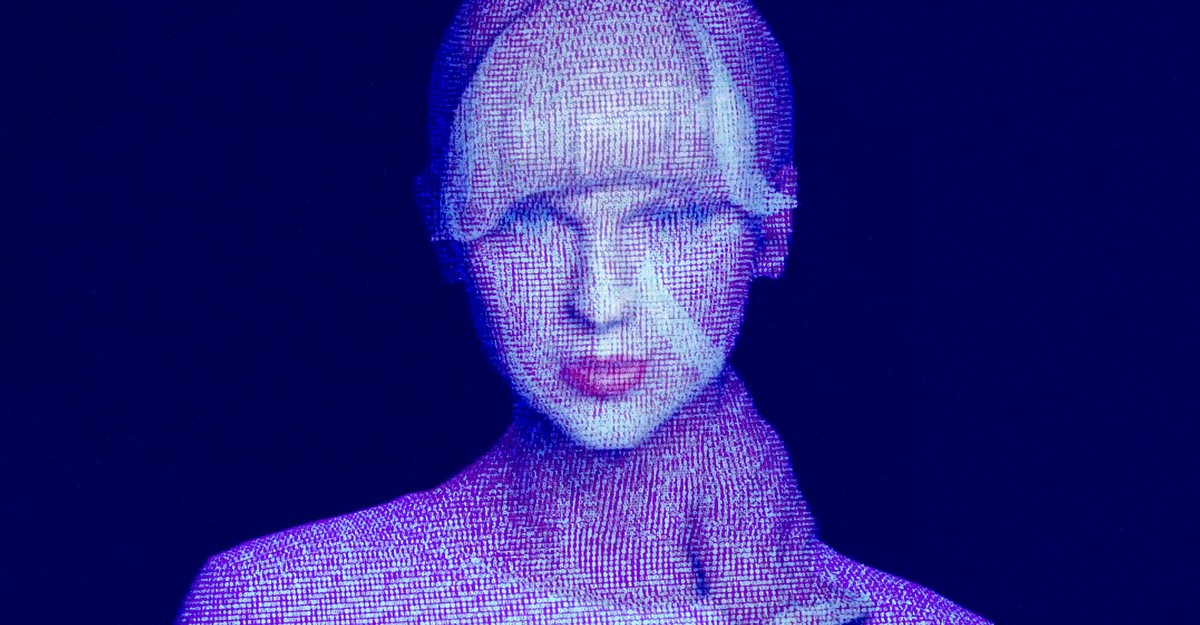

The arrival of AI Taylor Swift feels like a teaser for what’s to come in a strange new era defined by synthetic media, when the boundaries between real and fake might blur into meaninglessness. For years, experts have warned that AI would lead us to a future of infinite misinformation. Now that world is here. But in spite of apocalyptic expectations, the Swift fandom is doing just fine (for now). AI Taylor shows us how human culture can evolve alongside increasingly complex technology. Swifties, for the most part, don’t seem to be using the tool maliciously: They’re using it for play and to make jokes among themselves. Giving fans this tool is “like giving them a new kind of pencil or a paintbrush,” explains Andrea Acosta, a Ph.D. candidate at UCLA who studies K-pop and its fandom. They’re exploring creative uses of the technology, and when someone seems to go too far, others in the community aren’t afraid to say so.

In some ways, fans might be uniquely well prepared for the fabricated future: They’ve been having conversations about the ethics of using real people in fan fiction for years. And although every fandom is different, researchers say these communities tend to have their own norms and to be somewhat self-regulating. They can be some of the internet’s most diligent investigators. K-pop fans, Acosta told me, are so good at parsing what’s real and what’s fake that they sometimes manage to stop misinformation about their favorite artist from circulating. BTS fans, for example, have been known to call out factual inaccuracies in published articles on Twitter.

The possibilities for fans hint at a lighter side of audio and video produced by generative AI. “There [are] a lot of fears, and a lot of them are very justified, about deepfakes and the way that AI is going to kind of play with our perceptions of what reality is,” Paul Booth, a professor at DePaul University who has studied fandoms and technology for two decades, told me. “These fans are kind of illustrating different elements of that, which is the playfulness of technology and the way that it can always be used in kind of fun and maybe more engaging ways.”

But AI Taylor Swift’s viral spread on TikTok adds a wrinkle to these dynamics. It’s one thing to debate the ethics of so-called real-person fiction among fans in a siloed corner of the internet, but on such a large and algorithmically engineered platform, the content can instantly reach a huge audience. The Swifties playing with this technology share a knowledge base, but other viewers may not. “They know what she has said and what she hasn’t said, right? They’re almost immediately able to clock, Okay, this is an AI; she never said that,” Lesley Willard, the program director for the Center for Entertainment and Media Industries at the University of Texas at Austin, told me. “It’s when they leave that space that it becomes more concerning.”

Swifties on TikTok are already establishing norms around the voice AI, based at least in part on how Swift herself might feel about it. “If a bunch of people start saying, ‘Maybe this isn’t a good idea. It could be negatively affecting her,’” one 17-year-old TikTok Swiftie named Riley told me, “most people really just take that to heart.” Maggie Rossman, a professor at Bellarmine University who studies the Swift fandom, thinks that if Taylor were to come out against specific sound bites or certain uses of the AI voice, then “we’d see it shut down among a part of the fandom.”

But this is tricky territory for artists. They don’t necessarily want to squash their fans’ creativity and the sense of community it builds; fan culture is good for business. In the new world, they’ll have to navigate the tension between allowing some remixing and maintaining ownership of their voice and reputation.

A representative for Swift did not respond to a request for comment on how she and her team are thinking about this technology, but fans are convinced that she’s listening. After her official TikTok account “liked” one video using the AI voice, a commenter exclaimed, “SHES HEARD THE AUDIO,” following up with three crying emoji.

TikTok, for its part, just released new community guidelines for synthetic media. “We welcome the creativity that new artificial intelligence (AI) and other digital technologies may unlock,” the guidelines say. “However, AI can make it more difficult to distinguish between fact and fiction, carrying both societal and individual risks.” The platform does not allow AI re-creations of private individuals, but it gives “more latitude” for public figures, as long as the media is identified as AI-generated and adheres to the company’s other content policies, including those about misinformation.

But boundary-pushing Swift fans can probably cause only so much harm. They might destroy Ticketmaster, sure, but they’re unlikely to bring about AI armageddon. Booth thinks about all of this in terms of “degrees of worry.”

“My worry for fandom is, like, Oh, people are going to be confused and upset, and it may cause stress,” he said. “My worry with [an AI fabrication of President Joe] Biden is, like, It might cause a nuclear apocalypse.”